Conscious Machines

Oct 20, 2019

Computers have already surpassed us in their ability to perform certain cognitive tasks. Perhaps it won’t be long till every household ...

Is artificial intelligence bound to outstrip human intelligence? Should we be excited about using AI to enhance the human mind? Or should we fear the rise of robot overlords? These are the kinds of questions we’re asking in this week’s show.

Since the 1950s, AI cheerleaders have been breathlessly shouting: The Robots are Coming! They will be conscious and free and they will be much smarter than us. But we’re still waiting, so excuse me if I don’t buy into the latest hype.

Of course, I admit that it is not the 1950s anymore. It’s not even 1996 anymore. That was the year IBM’s chess-playing supercomputer Deep Blue first beat world chess champion Garry Kasparov. Though this was heralded by some as a giant leap for computer kind and a giant step back for humankind, it turned out to be another bit of excessive AI hype. IBM shut Deep Blue down when Kasparov demanded to know how it worked. They didn’t want to reveal that the decisive move that sealed Deep Blue’s victory over Kasparov wasn’t the result of superior intelligence, as Kasparov feared, but of a bug in the software. That was not the finest moment for AI, to say the least.

Since then, of course, AI has come of age, at least in one sense: it’s everywhere—in your car, on your phone, helping doctors diagnose diseases, helping judges make sentencing decisions. And it’s getting more and more powerful and pervasive every single day. But this does not mean that an AI apocalypse is about to arrive. For one thing, last time I looked, humans were still in charge. For another thing, many people can’t wait for the day when AI finally frees us all from dirt, drudgery, and danger. That wouldn’t be an AI dystopia, but an AI utopia.

But it would be naïve if we confidently expected that AI would necessarily usher in a utopia. Indeed, AI just might make us humans completely obsolete. There are already things that AI can do faster, cheaper, safer and more reliably than any human. Indeed, that is precisely why it has become so ubiquitous. But that raises a troubling question. What if for anything we can do, they can do it better? Wouldn’t that mean that we humans should just give way to the machines?

Of course, that raises a crucial question. AI is intended to be a tool. It’s not our master, so even if we could create a race of robot overlords, why on earth would we? The answer to that last question is not at all obvious. Perhaps unbridled scientific curiosity, or unrestrained economic greed, or maybe sheer stupidity could lead us down that path. Whatever the case, it’s a path we’d be pretty foolish to take, as John Stuart Mill warned back in the 19th century when AI wasn’t even the stuff of science fiction, let alone science fact. As Mill put it in his On Liberty:

Among the works of man, which human life is rightly employed in perfecting and beautifying, the first in importance surely is man himself. Supposing it were possible to get houses built, corn grown, battles fought, causes tried, and even churches erected and prayers said, by machinery—by automatons in human form—it would be a considerable loss to exchange for these automatons even the men and women who at present inhabit the more civilized parts of the world, and who assuredly are but starved specimens of what nature can and will produce. Human nature is not a machine to be built after a model, and set to do exactly the work prescribed for it, but a tree, which requires to grow and develop itself on all sides, according to the tendency of the inward forces which make it a living thing.

Mill has a very deep point. The only question worthy of our consideration in contemplating whether to deploy the sort of AI that might supplant the human being is what we humans become in a world dominated by such AI. Does the deployment of AI enhance human life and the human being or does it diminish human life and the human being?

Fortunately for us, the robots aren’t about to take over any time soon. The robot apocalypse still remains the stuff of science fiction! And some people might believe that that is where it will always remain. In reality, they say, AI will never be able to do philosophy, make scientific discoveries, or write stories, at least not better than humans can! Read one of those computer-generated stories and you will quickly come to appreciate that the human brain is better at spinning a narrative than any computer could be.

Clearly there is something special about the human brain. And you don’t have to be a dualist who believes that the immaterial soul is the seat of consciousness to think that. Our brains are the biological product of millions of years of evolution, and they’re finely tuned to do distinctively human things. So why think that we can replicate all that in mere machines?

The problem is that the human brain is itself just a fancy hunk of meat. If a fancy hunk of meat can be conscious, why deny that a fancy collection of computer chips can be? Although I don’t buy the excessive hype about AI, I am not at all skeptical about the potential of AI. Its potential is, I think, practically unlimited. But we’re nowhere near tapping that potential. Indeed, we’re a long way from figuring out how the brain produces consciousness, creativity, or compassion. If we can’t figure out how our own "wetware" does it, why think we’ll be able to program software to do it?

The bottom line is that at the very least, it’s way too soon to say the robot revolution has finally arrived. But that doesn’t mean that artificial intelligence isn’t a big deal. Even if it falls short of replicating full human consciousness, it still has the potential to disrupt our world, for better or worse, on a massive scale. And that alone means we need to do some hard thinking about whether to welcome or resist that disruption.

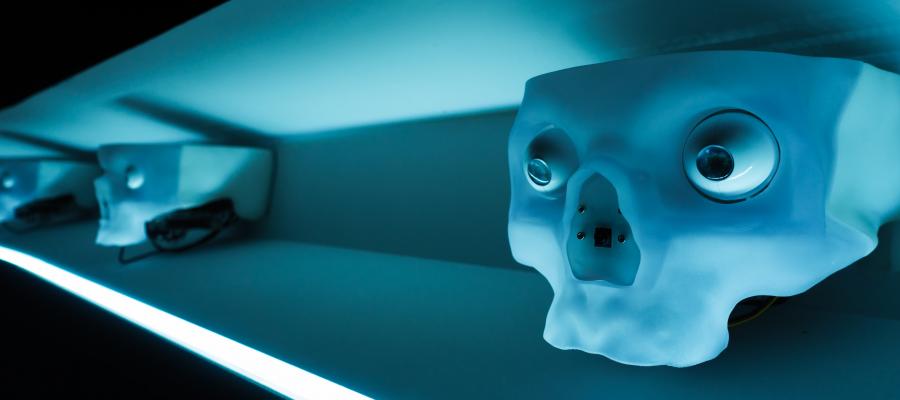

Photo by Alessio Ferretti on Unsplash

Comments (7)

Harold G. Neuman

Sunday, October 27, 2019 -- 12:56 PM

In another comment on this topic, I quoted what I called Searle's Assertion regarding minds and machines. It still makes the most sense to me, given our limited knowledge of the laws of physics. Of course, there is always the chance of discovery of new ones. I guess I'm just not keen on that, nor convinced that it would change the 'core definition' of life. Well, we are allowed to make mistakes.

Tim Smith

Saturday, December 7, 2019 -- 12:30 PM

Embodiment is the issue, not actual consciousness. We are all conscious. In a sense, the universe is conscious.

The issue with machines is what will be their body. What will be their sense of the one and identity.

Tim Smith

Thursday, May 12, 2022 -- 9:55 AM

Mary Roach has a remarkable little anecdote where a woman reaches orgasm by brushing her teeth. Human sexuality is a mystery, and the point is missed somewhere between Bonobo and Gorilla or Chimpanzee and Baboon. The idea of consciousness is lost when considered on an evolutionary scale instead of the instantiation observed. Salvation can be found from the view that orgasm is not essentially the same between Bonobo, Human, or Gorilla.

Roach's anecdote ends with the woman abandoning the toothbrush altogether for mouthwash, concluding the devil has possessed her. Possession is where the trouble starts with many efforts to create machine consciousness. Humans may parent AI; speaking as a parent here, once the light bulb goes on, it's lights out. I am proud of my kids but take little satisfaction in my parenting or credit.

Harold G. Neuman

Friday, May 13, 2022 -- 9:15 AM

The newer post on machine consciousness was not open to further comment. What I have read lately has not moved me much. Speculations seem rampant now concerning what machine circuitry may be capable of processing. Panpsychism is wildly popular. Consciousness is everywhere? Should robots be treated as property, or would that be ethically wrong, in denying them rights they would / should have as sentient beings? I suppose this is exciting---isn't it? I mean, it must be, right? Consciousness is hallucination? (the opposite of panpsychist doctrine). Can we have it both ways like particle behavior in quantum theory? So, even if we can have it so, what do we DO with that? I am pleased that this is exciting. Aren't you?

Harold G. Neuman

Tuesday, June 14, 2022 -- 8:30 AM

A Further Act, in the Theatre of the Absurd:

Yesterday, there was news of achievement of sentience in a robot. Presently, another flash admitted the 'originator(s)' of this feat were just joking around. Not good news for the AI world. But was it bad news? Hmmmmmm.

Harold G. Neuman

Wednesday, June 22, 2022 -- 7:35 AM

Been dropping my line in heretofore uncharted waters. One such blog originates from a prestigious school in Europe. It is a different fellowship of thinkers who inhabit this stream; a different sort of commenters, as well. There are specialties and generalities and the overall participation is cordial---with a few curmudgeonly souls like myself. AI is getting a lot of attention. Am getting new perspective on that.

Tim Smith

Wednesday, June 22, 2022 -- 9:42 AM

This is an interesting one - https://www.oxfordpublicphilosophy.com/blog

Was this the one?

I'm interested in what you are seeing. I'm not sure why you don't mention it by name. A machine consciousness submission there would have traction, as would any of the other topics, including the "long view" and Davidson's propositional attitudes - which, from your posts, are resonating with you.